Rollouts of SGRv2 trained on 4 colors (white, red, yellow, orange):

Success.

Success.

Success.

Success.

Success.

Failure: picked the wrong object.

Failure.

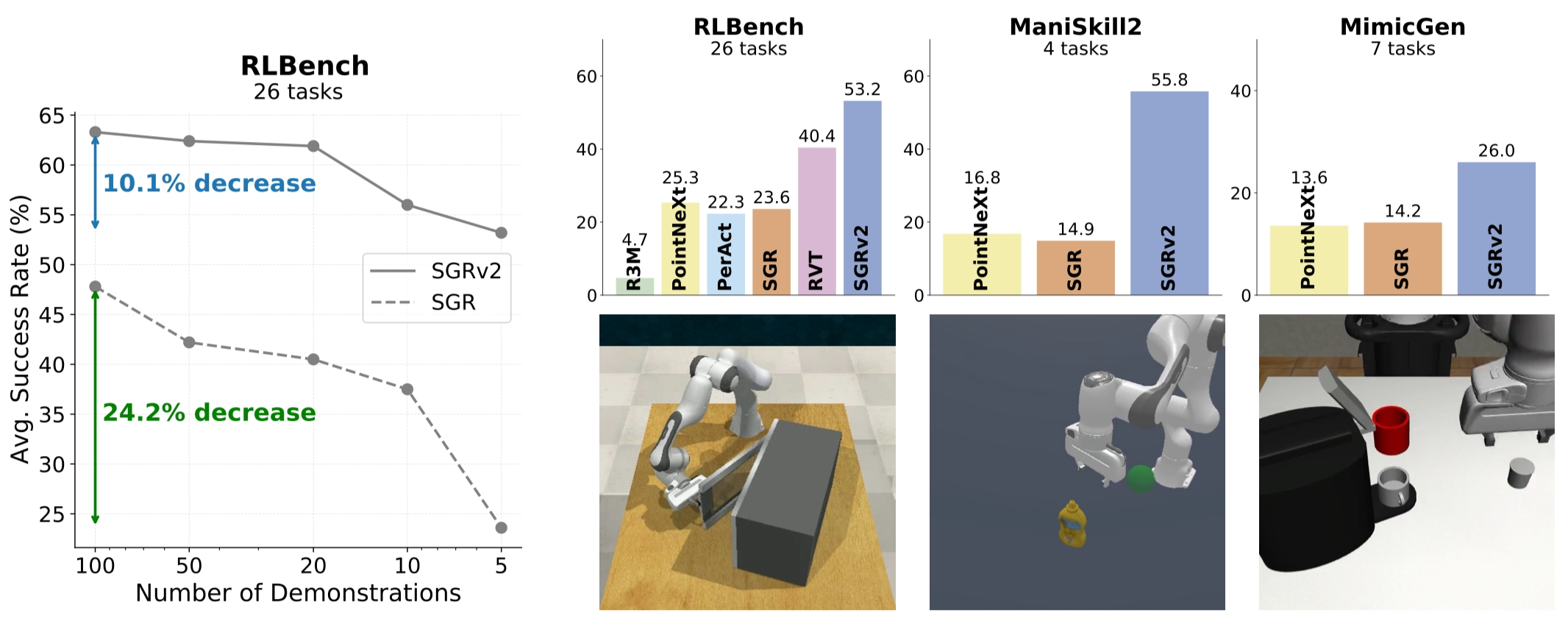

Given the high cost of collecting robotic data in the real world, sample efficiency is a consistently compelling pursuit in robotics. In this paper, we introduce SGRv2, an imitation learning framework that enhances sample efficiency through improved visual and action representations. Central to the design of SGRv2 is a critical inductive bias, action locality, which posits that a robot's actions are predominantly influenced by the target object and its interactions with the local environment.

Extensive experiments in both simulated and real-world settings demonstrate that action locality is essential for boosting sample efficiency. With keyframe control and merely 5 demonstrations, SGRv2 excels on RLBench, surpassing the RVT baseline in 23 of 26 tasks. Furthermore, when evaluated on ManiSkill2 and MimicGen with dense control, SGRv2 achieves a success rate 2.54 times that of SGR. In real-world environments, with only 8 demonstrations, SGRv2 performs a variety of tasks with a markedly higher success rate than the baseline models.

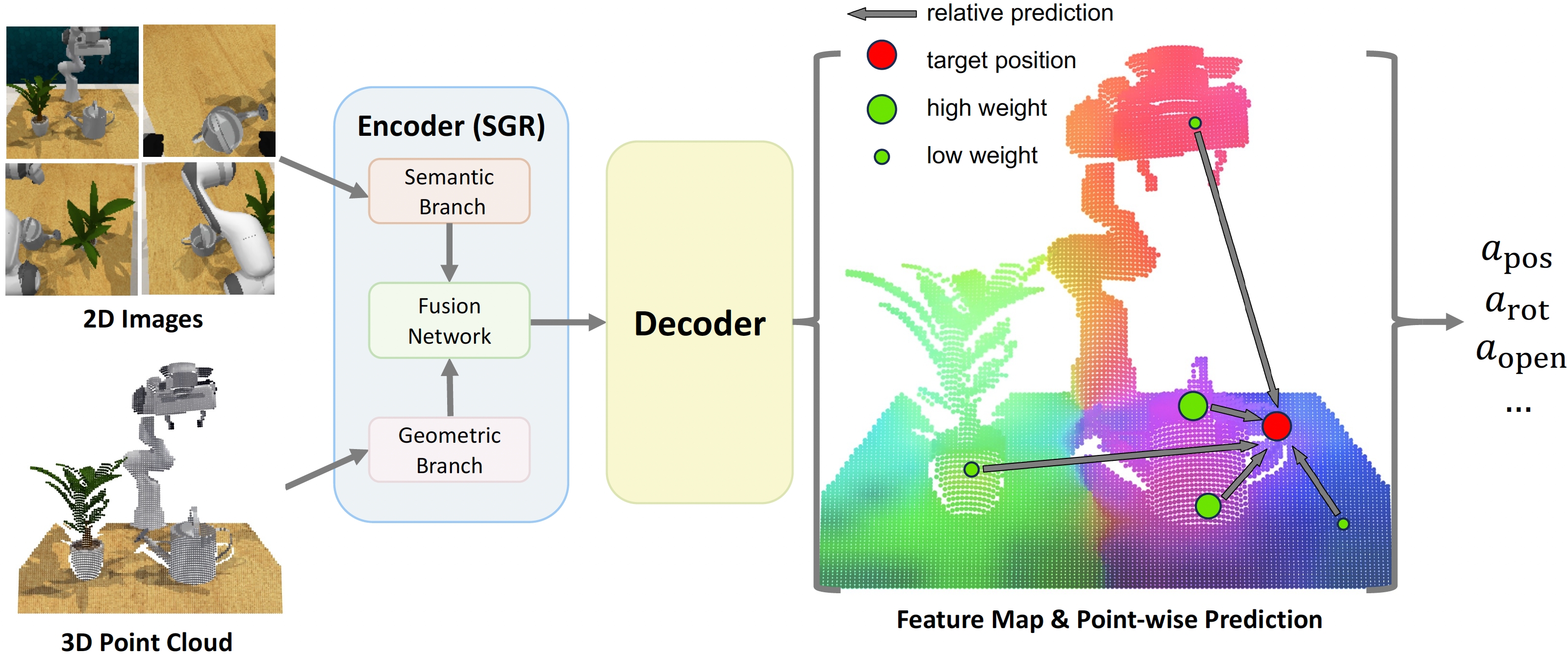

SGRv2 Architecture. Built upon SGR, SGRv2 integrates locality into its framework through four primary designs: an encoder-decoder architecture for point-wise features, a strategy for predicting the relative target position, a weighted average for focusing on critical local regions, and a dense supervision strategy (not shown in the figure). The illustration depicts the watering-plants task. For visual simplicity, it omits the proprioceptive data, which is concatenated with the RGB values of the point cloud before being fed into the geometric branch.
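The combination of relative target prediction and weighted averaging described in the caption can be illustrated with a short NumPy sketch. This is a minimal illustration under stated assumptions, not SGRv2's actual implementation. It assumes the network has already produced, for every point, a 3-D offset from that point to the target position and a scalar weight logit. The function names, the softmax normalization of the weights, and the squared-error form of the dense loss are hypothetical choices made for this example.

```python
import numpy as np


def softmax(logits: np.ndarray) -> np.ndarray:
    """Numerically stable softmax over a 1-D array of per-point logits."""
    shifted = logits - logits.max()
    exp = np.exp(shifted)
    return exp / exp.sum()


def aggregate_target_position(points, offsets, logits):
    """Hypothetical weighted average of per-point target estimates.

    points:  (N, 3) xyz coordinates of the point cloud.
    offsets: (N, 3) per-point offset from the point to the target
             (a relative target position; assumed network output).
    logits:  (N,)   per-point weight logits (assumed network output).
    Returns the (3,) aggregated target position and the (N,) weights.
    """
    weights = softmax(logits)              # non-negative, sum to 1
    per_point_targets = points + offsets   # each point "votes" for a target
    target = (weights[:, None] * per_point_targets).sum(axis=0)
    return target, weights


def dense_position_loss(points, offsets, target_gt):
    """Hypothetical dense supervision: every point's vote is penalized
    (mean squared Euclidean error) against the ground-truth target."""
    per_point_targets = points + offsets
    sq_err = np.sum((per_point_targets - target_gt) ** 2, axis=1)
    return float(np.mean(sq_err))


if __name__ == "__main__":
    points = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
    target_gt = np.array([0.5, 0.5, 0.0])
    offsets = target_gt - points           # perfect votes from every point
    logits = np.array([2.0, 0.0, -1.0])    # first point weighted most
    target, weights = aggregate_target_position(points, offsets, logits)
    print(np.allclose(target, target_gt))  # True
    print(dense_position_loss(points, offsets, target_gt))  # 0.0
```

Because the weights are normalized, points with large logits dominate the estimate, which is one way to let the prediction concentrate on a critical local region; supervising every point's vote, rather than only the aggregate, is what makes the loss "dense" in this sketch.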

@article{zhang2024leveraging,
  title={Leveraging Locality to Boost Sample Efficiency in Robotic Manipulation},
  author={Zhang, Tong and Hu, Yingdong and You, Jiacheng and Gao, Yang},
  journal={arXiv preprint arXiv:2406.10615},
  year={2024}
}